Non-transactional persistent resource managers are tricky in the context of database fail-over. A short discussion sheds light on some of the problems.

Non-Transactional Persistent Resource Managers

File systems and queuing systems are in general non-transactional persistent resource managers that are typically used outside transaction control, in many cases because the technology chosen does not support transactions in the first place (some implement transaction functionality, many do not; here I focus on those that do not). Files can be created or deleted, or their contents manipulated; queue elements can be enqueued into or dequeued from queues (in FIFO order, priority order, or any other supported queuing policy).

Because operations like inserting, updating or removing data items in non-transactional persistent resource managers are executed outside transaction control, (a) they cannot be coordinated in the same transaction as operations on transactional resource managers, and (b) in case of a failure, the state of the data items is unclear, since the non-transactional resource manager does not provide any transactional guarantees in failure situations (see the appendix below for the taxonomy on data consistency).

For example, when a data item is being enqueued in a queue and at that moment the queuing system fails (by itself or because the underlying hardware failed), there is no defined outcome for that enqueue operation. It could be that the data item is properly enqueued and all is fine. It is possible that the data item was not enqueued at all. Worse, it might be possible that the data item was enqueued, but not all supporting data structures were fully updated inside the queuing system, leaving the queuing system itself in an inconsistent state that still requires recovery actions.

Best Effort Consistency

From a software architecture viewpoint, non-transactional persistent resource managers are best-effort systems, and this has to be kept in mind when they are used and deployed. With today's rather reliable server hardware, this limiting property is easily forgotten or pushed aside because the mean time between failures is rather long.

However, when data consistency is critical and non-transactional resource managers have to be used, effort can be invested to mitigate at least some of the potential data consistency problems.

Data Consistency through Data Duplication

A fairly straightforward approach to integrating non-transactional resource managers is to store the data items twice: once in the non-transactional resource manager, and once in a transactional resource manager. Any update or delete must take place in both systems (however, as discussed, this cannot be done inside a single transaction across both).

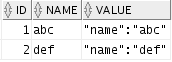

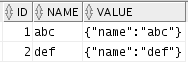

For example, every item enqueued in a non-transactional queue could also be inserted into a relational table. If an item is dequeued, it is also removed from the table. The logic could first enqueue the data item into the queue and, when successful, insert it into the table. The same applies upon dequeue: the item is first dequeued from the queue and, if successful, removed from the table. The invariant is that a queued data item is only considered consistent if it is present in the queue and in the table at the same time.
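The ordering described above can be sketched as follows. This is only a minimal illustration under stated assumptions: a Python `deque` stands in for the non-transactional queuing system, SQLite plays the transactional resource manager, and the names `queued_items`, `enqueue` and `dequeue` are hypothetical.

```python
import sqlite3
from collections import deque

# Hypothetical stand-in for a non-transactional queuing system.
queue = deque()

# SQLite plays the role of the transactional resource manager (assumption).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE queued_items (item_id TEXT PRIMARY KEY, payload TEXT)")


def enqueue(item_id, payload):
    """Enqueue first; insert into the table only if the enqueue succeeded."""
    queue.append((item_id, payload))  # step 1: non-transactional
    with db:  # step 2: transactional, commits on success, rolls back on error
        db.execute("INSERT INTO queued_items VALUES (?, ?)", (item_id, payload))


def dequeue():
    """Dequeue first; delete from the table only if the dequeue succeeded."""
    item_id, payload = queue.popleft()  # step 1: non-transactional
    with db:  # step 2: transactional
        db.execute("DELETE FROM queued_items WHERE item_id = ?", (item_id,))
    return item_id, payload
```

Note that the two steps in each function are not atomic as a pair; a failure between them leaves the item in only one of the two resource managers, which is exactly the situation the invariant is meant to detect.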

Studying only the enqueue case, there are several failure cases:

- Enqueue fails.

- Enqueue succeeds, but the system fails before the insert into the table takes place.

- Enqueue succeeds, but the insert into the table fails.

In the first case, the enqueue fails and the insert into the table will never take place. So the invariant is fulfilled. In the second case the item is in the queue, but not in the table. The invariant is violated. The system can now decide how to deal with the situation after the failure: it can remove the queue item, or it can insert it into the table. The same situation occurs in the third case.

Underlying this approach is the requirement that it is possible to check for the presence of a data item in the non-transactional as well as the transactional resource manager after a failure. For example, in the second case above, after the system comes back up, the following check has to occur: (a) for every item in the queue an equivalent item must be in the table, and (b) for each item in the table there must be an item in the queue. If there is a data item in one resource manager but not in the other, the invariant is violated and the inconsistency has to be removed by either removing or adding the data item so that none or both of the resource managers have the data item (and therefore the invariant holds).
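The two-way check can be expressed as a pair of set differences. The sketch below assumes that the queue can be inspected without consuming it (modelled here by a Python `deque`) and that items carry a unique identifier; the table name `queued_items` and the function name `find_inconsistencies` are hypothetical.

```python
import sqlite3
from collections import deque


def find_inconsistencies(queue, db):
    """Return (only_in_queue, only_in_table) as two sets of item ids."""
    queue_ids = {item_id for (item_id, _payload) in queue}
    table_ids = {row[0] for row in db.execute("SELECT item_id FROM queued_items")}
    return queue_ids - table_ids, table_ids - queue_ids


# Simulated state after a restart: "b" was enqueued, but the system failed
# before the insert into the table (the second failure case).
queue = deque([("a", "first"), ("b", "second")])
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE queued_items (item_id TEXT PRIMARY KEY, payload TEXT)")
with db:
    db.execute("INSERT INTO queued_items VALUES ('a', 'first')")

only_in_queue, only_in_table = find_inconsistencies(queue, db)
```

Both result sets being empty means the invariant holds; any id in either set identifies an item that has to be added to or removed from one of the resource managers.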

Database Fail-over Data Loss Recovery

When a database fails over, as discussed in earlier blogs, it is possible that no data loss occurs. In this case the transactional resource manager remains consistent with the non-transactional resource manager.

However, a data loss is possible. This means that one or more data items in the transactional resource manager that were present before the fail-over are not present anymore after the fail-over. In this case either the items need to be added to the transactional resource manager again (based on the content in the non-transactional resource manager), or they have to be removed from the non-transactional resource manager also.
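Both recovery options can be sketched in a few lines. As before, this is an illustration under assumptions rather than a definitive implementation: a `deque` models the queue (and is assumed to allow removal of arbitrary items), SQLite models the database after fail-over, and `recover_after_failover` with its `"reinsert"` and `"discard"` strategy names is hypothetical.

```python
import sqlite3
from collections import deque


def recover_after_failover(queue, db, strategy):
    """Restore the invariant after the table lost rows during a fail-over.

    "reinsert": copy the missing items from the queue back into the table.
    "discard":  remove items from the queue that the table no longer has.
    Returns the ids of the affected items.
    """
    table_ids = {row[0] for row in db.execute("SELECT item_id FROM queued_items")}
    missing = [(i, p) for (i, p) in queue if i not in table_ids]
    if strategy == "reinsert":
        with db:  # one transaction for all re-inserted rows
            db.executemany("INSERT INTO queued_items VALUES (?, ?)", missing)
    elif strategy == "discard":
        for item in missing:
            queue.remove(item)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [i for (i, _p) in missing]


# Simulated state: the fail-over lost the rows for "b" and "c".
queue = deque([("a", "first"), ("b", "second"), ("c", "third")])
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE queued_items (item_id TEXT PRIMARY KEY, payload TEXT)")
with db:
    db.execute("INSERT INTO queued_items VALUES ('a', 'first')")

repaired = recover_after_failover(queue, db, "reinsert")
```

Re-inserting is only possible because the queue still holds the full payload; if the table stored additional attributes that the queue does not carry, those would remain lost.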

The particular strategy has to be determined for the specific software architecture; with data duplication, however, this choice can be made as required by the application system at hand.

Effort vs. Consistency

The approach outlined above (which can be applied to any non-transactional resource manager that can check for the existence of data items) fundamentally makes the non-transactional resource manager pseudo-transactional by pairing it up with a transactional resource manager. This is implementation effort made in order to provide data consistency.

Less effort might mean less consistency. For example, it would be sufficient to store the data item in a table only for the duration until the data item is safely enqueued in the queue. Once that is the case the data item could be removed from the table. While this would ensure the consistency during the enqueue operation, it does not ensure consistency during the dequeue operation since not every type of failure during a dequeue operation would be recoverable.
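A sketch of this reduced-effort variant is shown below, assuming the table acts purely as a staging area for enqueues that are in flight. The names `pending_enqueues`, `enqueue_with_staging` and `recover_pending` are hypothetical, a `deque` again stands in for the queue, and the `fail_before_enqueue` flag exists only to simulate a crash.

```python
import sqlite3
from collections import deque

queue = deque()  # hypothetical stand-in for the non-transactional queue
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE pending_enqueues (item_id TEXT PRIMARY KEY, payload TEXT)")


def enqueue_with_staging(item_id, payload, fail_before_enqueue=False):
    """Stage the item in the table, enqueue it, then drop the staging row."""
    with db:
        db.execute("INSERT INTO pending_enqueues VALUES (?, ?)", (item_id, payload))
    if fail_before_enqueue:
        raise RuntimeError("simulated crash before enqueue")
    queue.append((item_id, payload))
    with db:
        db.execute("DELETE FROM pending_enqueues WHERE item_id = ?", (item_id,))


def recover_pending():
    """After a restart, finish every staged enqueue that is not in the queue."""
    queued_ids = {item_id for (item_id, _payload) in queue}
    rows = db.execute("SELECT item_id, payload FROM pending_enqueues").fetchall()
    for item_id, payload in rows:
        if item_id not in queued_ids:
            queue.append((item_id, payload))
    with db:
        db.execute("DELETE FROM pending_enqueues")
    return [item_id for (item_id, _payload) in rows]
```

Once the staging row is deleted, the table holds no trace of the item, so a failure during a later dequeue cannot be reconciled against it.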

In a data loss situation caused by a database fail-over, there would be no way to reconcile the two resource managers unless all data is available in both at the same time. In a particular situation that might be acceptable, but in general it would not.

Effort vs. Performance/Throughput

Additional effort takes additional resources, both in storage space and in processing time. The price for making the system more consistent is possibly a slower system, with less throughput and higher storage space requirements. Again, this is trading off non-functional against functional properties.

Summary

The outlined issues are present in all systems that deploy non-transactional persistent resource managers. The outlined solution is a generic one, and in specific situations alternative, more efficient approaches might be possible in order to guarantee data consistency.

As discussed, the choice of non-transactional persistent resource managers paired with the need for data consistency can be expensive from an engineering and system resource perspective. While the choice is not always a real choice, it is definitely worth evaluating alternatives that provide the required functionality in a transactional resource manager for comparison.

This blog is the last in the series on the use of transactional and non-transactional resource managers in the context of database fail-over. Clearly, the software architecture has to be aware of the fact that a database fail-over is possible and that a data loss might occur along the way due to network errors, increased network latency and system failures. Some of the architectural problems have been discussed, and some solutions presented as starting points for your specific application system development efforts.

Go SQL!

Appendix: Taxonomy

The software architecture taxonomy relevant for database fail-over can be built based on the combinations of resource manager types used. In the following, the various combinations are discussed at a high level (“x” means that the software architecture uses one or more of the indicated resource manager types).

| Software Architecture | Transactional Persistent | Non-transactional Persistent | Non-transactional and Non-persistent and Rebuildable | Non-transactional and Non-persistent and Non-rebuildable |
| --- | --- | --- | --- | --- |
| Consistent | x | | | |
| Consistent | x | | x | |
| Possibly consistent | x | x | | |
| Possibly consistent | x | x | x | |
| Possibly consistent | x | x | x | x |

Disclaimer

The views expressed on this blog are my own and do not necessarily reflect the views of Oracle.